Home-notsohome lab setups

You can find a more up-to-date version of this article here: https://bench.squeaky.tech/infra/homelab/

Common management

I use Ansible for most of my management tasks:

- setting up a new host (basic users, backup user, packages, SSH config, etc.)

- deploying k8s apps

- managing my tinc configurations

- update workflows for some apps

- package updates

- etc.

I also run Ansible AWX in my home k3s cluster, mainly to schedule package updates and to run some playbooks remotely.

Among the Ansible roles I use or have used:

- mrlesmithjr.postfix for postfix config

- willshersystems.sshd for sshd config

- ahuffman.sudoers for sudo + sudoers files

- ahuffman.resolv for resolv.conf

- weareinteractive.ufw for UFW firewall config

- likg.repo-icinga to deploy the Icinga repository

- manala.kernel to change some kernel settings (modules, parameters, …)

- xanmanning.k3s to deploy k3s clusters easily (it also handles updates)

And collections:

- community.zabbix for a full Zabbix deployment (server, proxy and agents)

- kubernetes.core to deploy, query and otherwise manage apps, and also to use Helm

I mainly use Ubuntu Server LTS as the OS, plus one or two Debian-based systems (like Proxmox) and two FreeBSD systems (one TrueNAS and one dedicated to databases).

In the future, I also plan to write roles to manage my MikroTik switches (at least) with Ansible, to make them easier to set up.

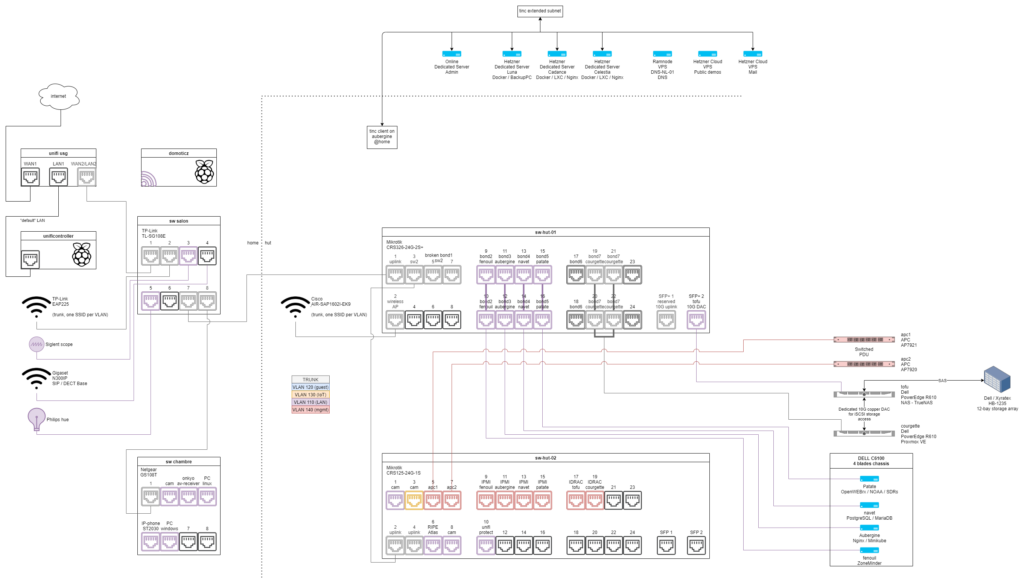

@ Home

For the home part, here is the server/lab side:

- Main MikroTik switch (CRS326-24G-2S+) with SFP+; all servers are connected to it through various bonds

- Secondary MikroTik switch (CRS125-24G-1S), without SFP+, used for less bandwidth-hungry traffic such as iDRAC, IPMI, etc.

- A Dell C6100 chassis with 4 blades:

  - fenouil: 2x Intel(R) Xeon(R) CPU L5520 @ 2.27GHz, 56G memory, Ubuntu Server LTS, mainly runs Paperless and ZoneMinder

  - aubergine: 2x Intel(R) Xeon(R) CPU L5520 @ 2.27GHz, 40G memory, Ubuntu Server LTS, runs my main nginx reverse proxy, Asterisk, LibreNMS, a Zabbix proxy and a single-node k3s cluster

  - navet: 2x Intel(R) Xeon(R) CPU L5520 @ 2.27GHz, 42G memory, FreeBSD, runs PostgreSQL and MariaDB only

  - patate: 2x Intel(R) Xeon(R) CPU L5520 @ 2.27GHz, 16G memory, Ubuntu Server LTS, runs various ham-radio software (web SDR, Airspy server, etc.)

- A Dell / Xyratex HB-1235 storage array, currently holding only 5x1T drives in a ZFS pool on tofu, with a direct SAS link between the chassis

- A Dell PowerEdge R610, 2x Intel(R) Xeon(R) CPU E5507 @ 2.27GHz, 48G memory, running TrueNAS; it exports some SMB shares and also exposes iSCSI (currently used by courgette, over a direct 10G copper DAC)

- A Dell PowerEdge R610, 2x Intel(R) Xeon(R) CPU E5507 @ 2.27GHz, 48G memory, running Proxmox VE:

  - VM storage is on iSCSI (tofu)

  - CT storage uses LVM LVs whose PV sits on iSCSI (tofu)

- There is an additional MacBook on a shelf for FLOSS development when needed, plus a dedicated UniFi Protect, a Cisco WiFi AP, a RIPE Atlas probe, etc.

- The main gateway is a UniFi USG, with its controller running separately on a Raspberry Pi

- There is also a Raspberry Pi running Domoticz (mainly used for power usage logging)

Everything is powered through two APC PDUs, which let me start the network first, wait, then the NAS, wait again, and then all the other servers.
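APC switched PDUs typically implement this kind of staged start with a per-outlet power-on delay. As a minimal sketch, a staged plan (stage, outlets, pause before the next stage) can be turned into per-outlet delays; the outlet names and wait times below are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Stage:
    """A boot stage: a group of PDU outlets, then a pause before the next stage."""
    name: str
    outlets: List[str]
    wait_after_s: int


def outlet_delays(stages: List[Stage]) -> Dict[str, int]:
    """Return each outlet's power-on delay, in seconds after the PDU powers up."""
    delays: Dict[str, int] = {}
    offset = 0
    for stage in stages:
        for outlet in stage.outlets:
            delays[outlet] = offset
        offset += stage.wait_after_s  # the next stage starts after this pause
    return delays


# Hypothetical outlet names and wait times, for illustration only.
plan = [
    Stage("network", ["switch-main", "switch-secondary"], 60),
    Stage("nas", ["nas", "storage-array"], 300),
    Stage("servers", ["c6100", "proxmox"], 0),
]

for outlet, delay in outlet_delays(plan).items():
    print(f"{outlet}: power on after {delay}s")
```

With these numbers, the switches start immediately, the NAS group after 60s and the remaining servers after 360s.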

No UPSes yet, but they are planned.

The whole rack draws around 850 to 900W.
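For a sense of scale, here is the yearly energy that range represents, assuming (as an approximation) a constant draw all year round and ignoring leap years:

```python
HOURS_PER_YEAR = 24 * 365  # 8760 h, ignoring leap years


def yearly_kwh(watts: float) -> float:
    """Energy consumed in one year by a constant load of `watts` watts, in kWh."""
    return watts * HOURS_PER_YEAR / 1000


for load in (850, 900):
    print(f"{load} W -> {yearly_kwh(load):.0f} kWh/year")
```

This prints 7446 kWh/year for 850W and 7884 kWh/year for 900W.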

As for the rack itself, it is a Towerez 19″ open-frame 32U rack, initially 60cm deep; its base was modified to 77cm, otherwise the PowerEdge rails would not fit.

Proxmox VMs/CTs:

- poireau: GLPI (being removed), NetBox

- curry: Home Assistant, Mosquitto (MQTT)

On the WiFi side, the two APs broadcast exactly the same SSIDs, each mapped to its own VLAN (one for LAN, one for IoT, etc.), except for the management VLAN (140), which has no SSID.
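This rule (same SSIDs on both APs, one VLAN per SSID, nothing on the management VLAN) is easy to check mechanically. A small sketch, where the SSID names and VLAN IDs are hypothetical and only VLAN 140 comes from the setup described above:

```python
from typing import Dict, List

MGMT_VLAN = 140  # management VLAN: must not be mapped to any SSID

# Hypothetical SSID -> VLAN maps for the two APs (names and IDs are illustrative).
ap1 = {"home": 10, "home-iot": 20}
ap2 = {"home": 10, "home-iot": 20}


def check_ap_configs(*aps: Dict[str, int]) -> List[str]:
    """List problems: APs whose SSID/VLAN map differs from the first AP,
    and SSIDs mapped to the management VLAN."""
    if not aps:
        return []
    problems = []
    reference = aps[0]
    for i, ap in enumerate(aps[1:], start=2):
        if ap != reference:
            problems.append(f"AP{i}: SSID/VLAN map differs from AP1")
    for i, ap in enumerate(aps, start=1):
        for ssid, vlan in sorted(ap.items()):
            if vlan == MGMT_VLAN:
                problems.append(f"AP{i}: SSID {ssid!r} is on management VLAN {vlan}")
    return problems


print(check_ap_configs(ap1, ap2) or "OK")
```

Keeping the maps identical on both APs is what lets clients roam between them while staying on the same VLAN.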

Pictures of the current rack setup:

In Datacenters

Admin server (Online, France):

- OpenLDAP + phpLDAPadmin, both in Docker

- Icinga + IcingaWeb2

- Zabbix server

- PowerDNS (primary) + PowerDNS Admin

- InfluxDB + Grafana

Secondary DNS (RamNode, Netherlands):

- PowerDNS (secondary)

Cloud server for public demos (Hetzner Cloud, Falkenstein):

Cloud server for email (Hetzner Cloud, Helsinki):

- Self-hosted mailcow, using Docker

And the three main servers, from the Hetzner Server Auction, all in Falkenstein:

- luna (Intel Core i7-2600, 16G RAM, 2x3T)

  - Docker for BackupPC only

  - This server is mainly for backup

- cadance (Intel Xeon E3-1270V3, 32G RAM, 2x2T)

  - Docker (various services)

  - LXC

- celestia (Intel Xeon E3-1246V3, 32G RAM, 2x2T)

  - Docker (various services)

  - LXC

Among the services I run on Docker are some Redis servers, a MariaDB, Sentry, Seafile, Nextcloud, FreshRSS, RSS-Bridge, a Matrix homeserver, a Prosody XMPP server, etc.

The LXC containers are used for some web hosting services (PHP, Gitea, etc.).

I also run Portainer CE on aubergine (at home) to manage the Docker containers not yet converted to docker-compose.

Monitoring

I use both Icinga2 and Zabbix: Icinga2 makes some checks easier to do, while Zabbix handles everything else.

In the long term, Zabbix will entirely replace Icinga2, but for now Icinga2 makes a lot of custom tests easy.

The Icinga-specific monitoring is mainly:

- check_dns_soa (on my own branch, for serial checking) to check that all my DNS servers are correctly in sync

- check_freenas for my TrueNAS/FreeNAS

- chkNmapScanCustom.pl to check open ports on my public IPs (used with -p-, runs every hour)

- check_docker for Docker containers

- check_backuppc to check the status of the backups made by my BackupPC
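The decision logic behind two of these checks is simple to sketch. The Python below illustrates the idea only; it is not the code of check_dns_soa or chkNmapScanCustom.pl. It assumes the SOA serials and the set of open ports have already been collected (for example from DNS queries and an nmap -p- scan), and maps the result to the standard plugin exit codes (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN). Treating a serial mismatch as WARNING rather than CRITICAL is an assumption.

```python
from typing import Dict, Optional, Set, Tuple

# Standard Nagios/Icinga plugin exit codes.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3


def check_soa_sync(serials: Dict[str, Optional[int]]) -> Tuple[int, str]:
    """serials maps each nameserver to the SOA serial it returned (None = no answer)."""
    if not serials:
        return UNKNOWN, "no nameservers to check"
    silent = sorted(ns for ns, serial in serials.items() if serial is None)
    if silent:
        return CRITICAL, "no SOA answer from " + ", ".join(silent)
    distinct = set(serials.values())
    if len(distinct) > 1:
        # Assumption: a mismatch is only a WARNING, since secondaries can lag briefly.
        detail = ", ".join(f"{ns}={serial}" for ns, serial in sorted(serials.items()))
        return WARNING, "SOA serials differ: " + detail
    return OK, f"{len(serials)} nameservers at serial {distinct.pop()}"


def check_open_ports(open_ports: Set[int], allowed: Set[int]) -> Tuple[int, str]:
    """Alert on any port the scan found open that is not explicitly allowed."""
    unexpected = sorted(open_ports - allowed)
    if unexpected:
        return CRITICAL, "unexpected open ports: " + ", ".join(map(str, unexpected))
    return OK, f"{len(open_ports)} open ports, all allowed"


# Hypothetical inputs, for illustration only.
print(check_soa_sync({"ns1.example.net": 2024010101, "ns2.example.net": 2024010101}))
print(check_open_ports({22, 443}, {22, 80, 443}))
```

A real plugin would print the message and exit with the returned code, which is what Icinga reads as the check state.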

Here is my commands_custom.conf:

```
object CheckCommand "docker_backuppc_all" {
  import "plugin-check-command"

  command = "docker exec -u backuppc backuppc /home/backuppc/check_backuppc/check_backuppc"
}

object CheckCommand "docker_backuppc_one" {
  import "plugin-check-command"

  // No "-it" here: Icinga runs checks without a terminal, and
  // "docker exec -it" then fails with "the input device is not a TTY".
  command = [ "docker", "exec", "-u", "backuppc", "backuppc", "/home/backuppc/check_backuppc/check_backuppc" ]

  arguments = {
    "-H" = "$hostname$"
  }
}

object CheckCommand "check_docker" {
  import "plugin-check-command"

  command = [ "check_docker" ]

  arguments = {
    "--containers" = {
      value = "$docker_containers$"
    }
    "--present" = {
      set_if = "$docker_present$"
    }
    "--cpu" = {
      value = "$docker_cpu$"
    }
    "--memory" = {
      value = "$docker_memory$"
    }
    "--status" = {
      value = "$docker_status$"
    }
    "--health" = {
      set_if = "$docker_health$"
    }
    "--restarts" = {
      value = "$docker_restarts$"
    }
  }
}

object CheckCommand "check_zone_dns_soa" {
  import "plugin-check-command"

  command = [ "/usr/lib/nagios/plugins/check_dns_soa", "-4", "-v", "3", "-t", "10" ]

  arguments = {
    "-H" = "$soa_zone$"
  }
}

object CheckCommand "check_allowed_open_ports" {
  import "plugin-check-command"

  command = [ "/usr/lib/nagios/plugins/chkNmapScanCustom.pl" ]
  timeout = 5m

  arguments = {
    "-n" = "$nmap_path$"
    "-i" = "$check_address$"
    "-p" = "$allowed_ports$"
    "-c" = "$nmap_args$"
  }

  vars.nmap_path = "/usr/bin/nmap"
  vars.allowed_ports = ""
  vars.nmap_args = "-p-"
}

object CheckCommand "check_freenas" {
  import "plugin-check-command"

  command = [ "/usr/lib/nagios/plugins/check_freenas/check_freenas.rb" ]

  arguments = {
    "-s" = {
      value = "$freenas_host$"
      description = "FreeNAS Host"
      required = true
    }
    "-u" = {
      value = "$freenas_username$"
      description = "Username"
      required = true
    }
    "-p" = {
      value = "$freenas_password$"
      description = "Password"
      required = true
    }
    "-k" = {
      set_if = "$freenas_selfsigned_ssl$"
      description = "Selfsigned SSL"
    }
    "-z" = {
      value = "$freenas_zpool$"
      description = "Zpool name"
    }
    "-d" = {
      value = "$freenas_dataset$"
      description = "Dataset name"
    }
    "-i" = {
      value = "$freenas_dataset_only$"
      description = "Dataset regexp"
    }
    "-x" = {
      value = "$freenas_dataset_exclude$"
      description = "Exclude dataset"
    }
    "-m" = {
      value = "$freenas_module$"
      description = "Module for checks"
    }
    "-w" = {
      value = "$freenas_warning$"
    }
    "-c" = {
      value = "$freenas_critical$"
    }
  }
}
```

With Zabbix, I use these (non-default) templates:

- Tinc (custom, WIP: checks that tinc is running and pings a remote IP in the tinc subnet)

- Template App PHP-FPM

- SMTP Service (custom, only checks that an SMTP service is listening on port 25)

- SMTP Service on localhost (same, but on 127.0.0.1)

- DNS Resolution (custom, WIP, checks that the current resolvers work)

- Template Postfix Services

- Linux filesystems read-only (custom, WIP, checks whether any filesystem is mounted read-only)

- Kubernetes

- FreeNAS (snmp)

- APC PDUs (still searching for the right template)
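A custom check like the read-only filesystems one can be as small as parsing /proc/mounts. A minimal sketch, assuming /proc/mounts-format input and a hypothetical ignore list for filesystem types that are read-only by design (Ubuntu, for instance, mounts snaps as read-only squashfs). On a real host it could be fed `open("/proc/mounts")` and wired into Zabbix through an agent UserParameter; the sample lines below stand in for the real file.

```python
from typing import Iterable, List

# Assumed ignore list: filesystem types that are read-only by design.
IGNORED_FSTYPES = {"squashfs", "iso9660"}


def readonly_mounts(mount_lines: Iterable[str]) -> List[str]:
    """Return the mount points mounted read-only, from /proc/mounts-format lines."""
    found = []
    for line in mount_lines:
        fields = line.split()
        if len(fields) < 4:
            continue  # skip blank or malformed lines
        _device, mountpoint, fstype, options = fields[:4]
        if fstype in IGNORED_FSTYPES:
            continue
        if "ro" in options.split(","):
            found.append(mountpoint)
    return found


# Sample /proc/mounts content (hypothetical), instead of reading the real file.
sample = [
    "/dev/sda2 / ext4 rw,relatime 0 0",
    "/dev/sdb1 /srv ext4 ro,relatime 0 0",
    "/dev/loop0 /snap/core20/1234 squashfs ro,nodev,relatime 0 0",
]
print(readonly_mounts(sample))
```

Splitting on whitespace is safe here because /proc/mounts escapes spaces in paths as `\040`. The item would then alert whenever the returned list is non-empty.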

Not yet used but I need to:

For my home network specifically, I also run LibreNMS, mostly for networking and some graphs.

Networking

I have a private VLAN between luna, cadance and celestia.

There is also an “extended LAN” built on tinc in switch mode. A tinc node runs on at least admin, mail, luna, cadance, celestia and aubergine, and the bridges attached to it all share the same subnet.

The Docker containers on luna, cadance, celestia and admin all have an IP in that subnet, and so do the hosts.

This lets me use any service from anywhere over that private VPN/LAN without issues.
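Because tinc in switch mode behaves like a single Ethernet switch spanning all nodes, every host and container on the extended LAN sits in one broadcast domain, so addresses must be unique across all sites. A small sketch for validating such an address plan, using a hypothetical subnet and hypothetical assignments (the real ones are not given here):

```python
import ipaddress
from typing import Dict, List

# Hypothetical extended-LAN subnet and assignments (not the real ones).
EXTENDED_LAN = ipaddress.ip_network("10.42.0.0/16")

PLAN = {
    "luna": "10.42.1.1",
    "cadance": "10.42.2.1",
    "celestia": "10.42.3.1",
    "admin": "10.42.4.1",
    "cadance/some-container": "10.42.2.10",
}


def validate_plan(net: ipaddress.IPv4Network, plan: Dict[str, str]) -> List[str]:
    """Check that every address is a usable host address in net and is unique."""
    problems = []
    owners = {}  # address -> first name that claimed it
    for name, addr in plan.items():
        ip = ipaddress.ip_address(addr)
        if ip not in net:
            problems.append(f"{name}: {ip} is outside {net}")
        elif ip in (net.network_address, net.broadcast_address):
            problems.append(f"{name}: {ip} is not a usable host address")
        if ip in owners:
            problems.append(f"{name}: {ip} already assigned to {owners[ip]}")
        else:
            owners[ip] = name
    return problems


print(validate_plan(EXTENDED_LAN, PLAN) or "address plan OK")
```

Running such a check before assigning a new container address catches duplicates that would otherwise only show up as intermittent ARP conflicts across sites.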

Future plans

I am thinking about converting the three main Docker servers into a k3s cluster, keeping luna as the backup server but running only the control plane on it.

Since k3s can run alongside everything else, I might do that soon and gradually migrate things to it.

For storage, maybe a distributed Longhorn setup; I don’t know yet.

At home, the gateway is going to be replaced very soon by a MikroTik router, an RB4011iGS+RM. The switches on the home side are old 8-port managed ones, and the link between home/gateway and the hut/rack is 1G.

A 10G copper link will replace the 1G one, and the switches will become small MikroTik ones, probably with the desktops on 10G copper too, plus a gigabit switch for everything else (Philips Hue, AV receiver, etc.).

Thanks

For the reviews of this post to: